The connectivity the world has achieved through social media has a flip side. It has been extensively reported that incidents of spamming by bots and fake accounts on Twitter are increasing. Such accounts can help spread fake news and opinions, creating confusion and potentially spreading rumors. Using bots and fake accounts to make a topic trend on Twitter or to generate artificial likes is also becoming commonplace. Twitter, in particular, is one of the worst sufferers of this “tragedy of the commons” due to its openness. The talented engineers at Twitter respond with regular product and policy changes to curb this menace, but the problem persists. While the arms race between spammers and Twitter rages on, we tried to determine whether AI can help an information seeker stay on top of this game.

To bust fake accounts using AI, we first need to define what constitutes a fake account. We had two hypotheses about the types of fake accounts that could exist. When we tested them using AI algorithms, it turned out that both hypotheses could indeed help us label accounts as “Fake” with a certain probability. At Karna Analytics (a division of ParallelDots), our Machine Learning research team ran multiple experiments to track these types of accounts and categorised them into two types, “Spammy Users” and “Bot Users”, based on their activity and the content of their posts.

In this blog post, we describe the approach we use to detect “Spammy Users” (or “Spammers”) and Twitterbots that post large amounts of malicious and spam content on the platform. We also discuss how this approach can improve the quality of research performed on social media data. Our analysis is based on data we tracked for two trending hashtags: #Presidentielle (for the 2017 French presidential election, which we predicted correctly using AI) and #Jio (a popular telecom company in India).

Busting Spammers

The Hypothesis: Spammers are not that good at spamming

We observe that getting fake accounts to tweet about a #hashtag, increasing its mentions until it becomes a trending topic, is one of the most common spamming tricks (search for “Twitter hashtag trending services” and you will see what we mean). From a spammer’s perspective, posting tweets from many fake accounts in quick succession is a challenging task. Ideally, a spammer should post tweets that are relevant yet different from each other, so that the trend looks genuine. Our key hypothesis is that achieving this within the constraints of time and money is hard, so potential spammers end up doing little to edit their tweets. As seen below, even celebrities who tweeted about Jio (probably as part of an influencer marketing strategy) ended up posting the same tweets.

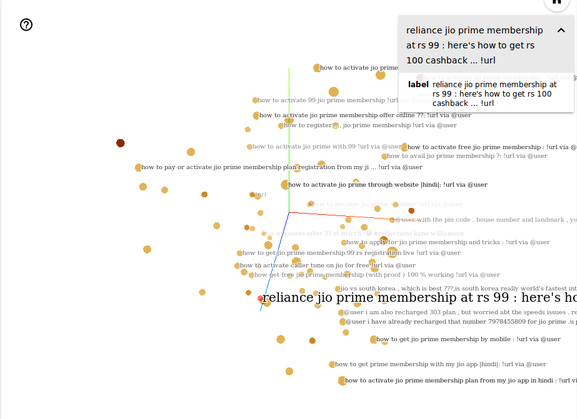

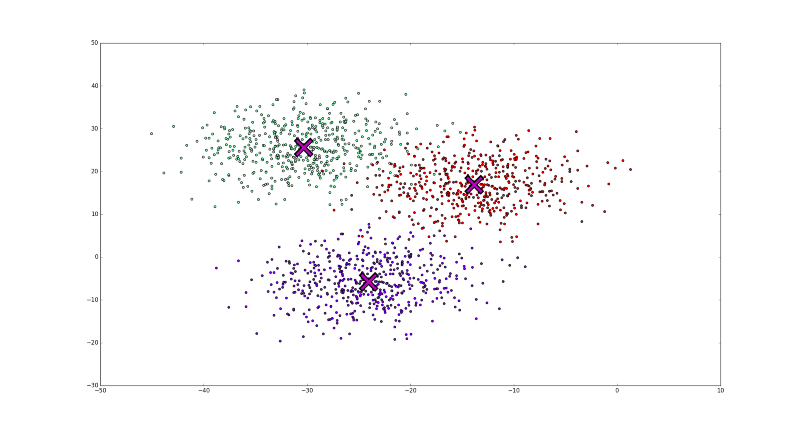

Based on this idea, we have found that spammers can be effectively identified by looking at all the tweets about a topic and picking out those that are contextually very close to each other and posted within a very short span of time (~15 minutes). For this, we use our proprietary text analytics algorithm, Semantic Similarity, to cluster contextually similar tweets. To take an analogy from the real world, we use AI to closely examine students’ answer sheets and identify who cheated during the exam. For those looking for some intuition on how this works, we have added below a visualization of how we cluster contextually similar tweets.

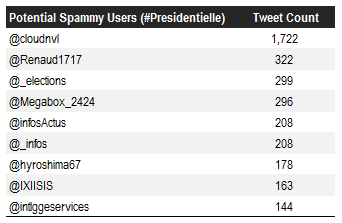
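For readers who prefer code to pictures, here is a minimal sketch of the idea, not our production system. Semantic Similarity is proprietary, so the sketch swaps in a plain bag-of-words cosine similarity as a crude stand-in. The function names, the 0.8 similarity threshold, and the sample tweets are all assumptions made up for illustration; only the 15-minute window comes from the text above.

```python
import math
import re
from collections import Counter
from datetime import datetime, timedelta


def vectorize(text):
    """Bag-of-words term counts (a crude stand-in for a semantic embedding)."""
    return Counter(re.findall(r"[a-z0-9#@']+", text.lower()))


def cosine_similarity(a, b):
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def cluster_near_duplicates(tweets, min_similarity=0.8,
                            window=timedelta(minutes=15)):
    """Group tweets that are near-identical AND posted close together in time.

    tweets: list of (handle, timestamp, text) tuples.
    Returns clusters (lists of tweet indices) containing at least two tweets.
    Pairs are linked with union-find, so a chain of linked tweets can span
    slightly more than one window.
    """
    vectors = [vectorize(text) for _, _, text in tweets]
    parent = list(range(len(tweets)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path compression
            i = parent[i]
        return i

    for i in range(len(tweets)):
        for j in range(i + 1, len(tweets)):
            close_in_time = abs(tweets[i][1] - tweets[j][1]) <= window
            if close_in_time and cosine_similarity(vectors[i], vectors[j]) >= min_similarity:
                parent[find(i)] = find(j)

    groups = {}
    for i in range(len(tweets)):
        groups.setdefault(find(i), []).append(i)
    return [members for members in groups.values() if len(members) > 1]


# Invented sample data, for illustration only.
t0 = datetime(2017, 5, 1, 12, 0)
sample_tweets = [
    ("user_a", t0, "Loving the new #Jio offer, unlimited data for everyone"),
    ("user_b", t0 + timedelta(minutes=3), "Loving the new #Jio offer, unlimited data for everyone!"),
    ("user_c", t0 + timedelta(minutes=7), "Loving the new #Jio offer unlimited data for everyone"),
    ("user_d", t0 + timedelta(minutes=9), "My network has been down since morning, any fix?"),
    ("user_e", t0 + timedelta(hours=5), "Loving the new #Jio offer, unlimited data for everyone"),
]

clusters = cluster_near_duplicates(sample_tweets)
suspect_handles = sorted({sample_tweets[i][0] for c in clusters for i in c})
```

In this toy sample, the first three handles end up in one cluster. The unrelated complaint is left alone, and so is the identical tweet posted five hours later, because it falls outside the time window. The pairwise comparison is quadratic in the number of tweets, so a real pipeline over tens of thousands of tweets would likely need time bucketing or an approximate nearest-neighbour index.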

We analysed more than 50,000 tweets for #Presidentielle and #Jio and used the Semantic Similarity technique to identify clusters of users who post very similar tweets multiple times. We produced the list of potential spammers below based on the contextual similarity and frequency of their tweets. If you search for these users on Twitter, you will notice that some accounts have already been deleted or don’t appear in search results, as Twitter classified them as spammers as well.

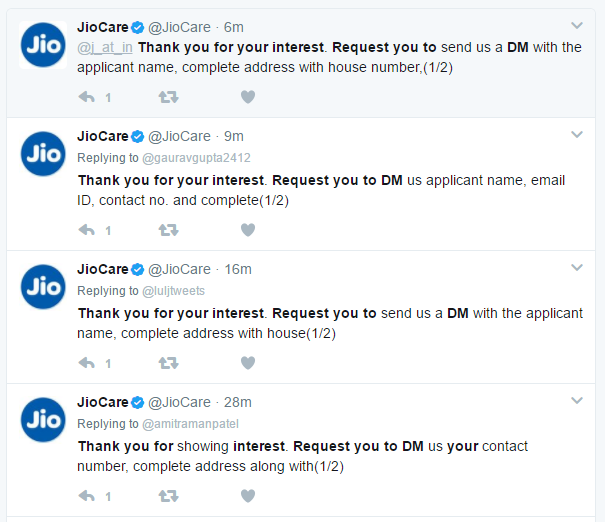

Note that the user ‘@JioCare’ is the customer support handle for Reliance Jio. Our model categorizes it as a potential spammer because of its standard replies to user queries. For example, the handle might reply with a standard note for detailed assessment of the query:

As you can see from the lists, these users tweeted multiple times in the selected time period. Semantic Similarity clusters the contextually similar tweets, which lets us identify the handles of such users.
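Going from clusters to a list of handles can be sketched as a simple frequency count. This is an illustrative assumption about the ranking step, not a description of our actual scoring. The function name `rank_suspect_handles`, the `min_tweets` cut-off, and the example handles (other than @JioCare) are hypothetical.

```python
from collections import Counter


def rank_suspect_handles(clusters, min_tweets=2):
    """Rank handles by how many of their tweets landed in similarity clusters.

    clusters: list of clusters; each cluster lists the handle of every tweet
              in it, so a handle appears once per clustered tweet.
    Returns (handle, count) pairs, most frequent first, keeping only handles
    with at least `min_tweets` clustered tweets.
    """
    counts = Counter(handle for cluster in clusters for handle in cluster)
    return [(handle, n) for handle, n in counts.most_common() if n >= min_tweets]


# Invented clusters, for illustration only.
example_clusters = [
    ["@promo_1", "@promo_2", "@promo_1", "@promo_1"],
    ["@promo_2", "@JioCare", "@JioCare"],
    ["@casual_user", "@promo_1"],
]

ranked = rank_suspect_handles(example_clusters)
```

A purely frequency-based ranking like this flags a support handle such as @JioCare for the same reason our model did: templated replies look like repeated content. Results still need a manual review pass.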

Why is spam filtering important?

Filtering out spam users lets you listen to users’ unbiased opinions about a topic and remove the noise created by spammers. Here are a few use cases of spam filtering:

- Get unique and unbiased data for analyzing what users are saying about your brand.

- Assess the performance of a particular marketing campaign on Twitter to understand if the tweets have been generated organically or artificially by spammers.

- Get a better understanding of your customer persona by weeding out spammers.

- Political organizations and intelligence agencies can use spam filtering to analyse fake accounts that are spamming and pushing their ideological agenda.

This approach is one of the many that we have successfully tested for finding spammy users. Now, we will discuss how we successfully identified bot users using a similar approach.

Detecting Twitterbots

Twitterbots automate and speed up the content delivery process. One study estimated that active bots may account for as much as 15% of all Twitter users.

Initially, Twitterbots were made to reduce human effort. Take the Netflix Bot, for example: it tweets whenever a new show or movie is added to Netflix.

There are some extraordinary ones as well. For example, someone has created a very smart online version of Big Ben that marks the passing of every hour, as shown in the tweet below. Now that humanity is spending more and more of its time online, it is only a matter of time before our monuments start having an online presence too.

BONG BONG BONG BONG BONG BONG BONG BONG BONG BONG BONG BONG

— Big Ben (@big_ben_clock) May 31, 2017

But there is also a large herd of Twitterbots that post large amounts of malicious and spam content on the platform. I am sure you can find some in your followers list as well. According to Wikipedia, bots also had a role to play in the 2016 US Presidential Election.

A subset of Twitter Bots programmed to complete social tasks played an important role in the United States 2016 Presidential Election. Researchers estimated that pro-Trump bots generated four tweets for every pro-Clinton automated account and out-tweeted pro-Clinton bots 7:1 on relevant hashtags during the final debate. Deceiving Twitter bots fooled candidates and campaign staffers into retweeting misappropriated quotes and accounts affiliated with incendiary ideals.

-Wikipedia

Twitterbots and spammers try to cloud the views of other users by constantly promoting fake news and opinions. Since no human effort is required, bots can tirelessly keep tweeting about a topic and help it trend. For a political analyst, market researcher or anyone else seeking to do in-depth analysis using social media, it is important to identify and filter out these bots to get genuine, unbiased opinions.

The Hypothesis

The idea behind our AI-driven approach to identify bots on social media is based on this hypothesis: “Tweets made by bots are related to a very narrow topic/context while humans’ tweets are much more diverse”.

How we did it

To use this approach to automatically identify bots, we crawled the latest tweets posted by a large sample of Twitter accounts, making sure the sample was diverse. For each account, we converted the tweet text into vectors and measured how similar the tweets were by computing the average distance between those vectors.

If a handle tweets about the same topic and theme, its tweets (individual data points) will be located close together in the vector space because of their semantic similarity. These closely packed, similar tweets form a cluster. We can quantify the similarity by calculating the cosine distance between any two data points.

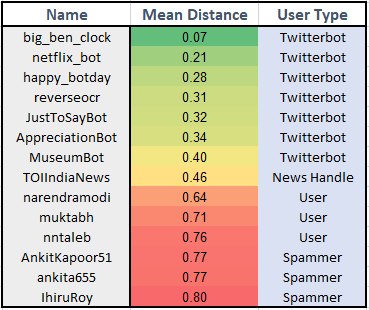

The table below presents the results of the analysis. Here, Mean Distance is the average of the cosine distances between all pairs of individual data points. The lower the Mean Distance, the more similar the tweets, as the table shows. The aforementioned Big Ben bot has the lowest Mean Distance among the chosen accounts, as its posts contain only the word ‘BONG’.

We also chose a few ‘Spammer’ accounts to highlight the difference between a bot and a spammer. Spammers post about multiple topics from time to time, whereas bots generally post about one specific theme. Thus, the spammers’ Mean Distance is far greater than that of the bots. Notice that the Mean Distance of TOIIndiaNews (a leading Indian news publisher) is close to that of the bots. Such handles generally follow a standardized structure when posting news, so their Mean Distance is relatively low.
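The Mean Distance metric described above can be sketched in a few lines. This is an illustration under stated assumptions, not our actual pipeline. Tweets are vectorized with simple word counts rather than the semantic vectors we use, and the helper names and sample tweets (apart from the ‘BONG’ pattern) are invented for the example.

```python
import math
import re
from collections import Counter
from itertools import combinations


def vectorize(text):
    """Bag-of-words term counts (a stand-in for a real semantic embedding)."""
    return Counter(re.findall(r"[a-z0-9#@']+", text.lower()))


def cosine_distance(a, b):
    """1 - cosine similarity; 0 means same direction, 1 means no shared terms."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    if norm == 0:
        return 1.0  # treat an empty tweet as maximally distant
    return 1.0 - dot / norm


def mean_distance(tweets):
    """Average cosine distance over all pairs of an account's tweets."""
    vectors = [vectorize(text) for text in tweets]
    pairs = list(combinations(vectors, 2))
    if not pairs:
        raise ValueError("need at least two tweets to compute a mean distance")
    return sum(cosine_distance(a, b) for a, b in pairs) / len(pairs)


# Invented sample timelines, for illustration only.
bot_like = ["BONG BONG BONG", "BONG BONG BONG BONG", "BONG"]
human_like = [
    "Stuck in traffic again this morning",
    "Great match last night, what a finish",
    "Trying a new pasta recipe for dinner",
]

bot_score = mean_distance(bot_like)
human_score = mean_distance(human_like)
```

With these samples, the bot-like timeline scores far lower than the human-like one, which is the pattern the hypothesis predicts. Where to draw the line between the two is a tuning decision that depends on the vectors used, so we do not suggest a threshold here.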

Impacts of bots on the real world

Here are a few cases where Twitterbots were influential and why it is important to identify them.

- The number of followers on social media is considered a popularity metric for celebrities. But is it really? As mentioned earlier, around 15% of Twitter users might be bots, so the raw follower count is not a reliable popularity metric. During the 2012 US Presidential Election, it was reported that 29.9% of Barack Obama’s followers might be bots or fake accounts, while the figure for Mitt Romney was around 21.9%. The number of followers after removing bots and spammers can serve as a better popularity metric.

- Twitterbots are said to have influenced voters’ opinions by tweeting and retweeting tons of pro-Trump content during the 2016 US Presidential Election. As mentioned earlier, pro-Trump bots generated four tweets for every pro-Clinton automated account and out-tweeted pro-Clinton bots 7:1 on relevant hashtags during the final debate. Some of the content shared by these bots was fake and deceptive. Thus, it becomes really important to clearly identify these bots in order to get views and opinions only from real people.

- The recently concluded French Presidential Election also saw the involvement of bots. Just before the election, a massive 9 GB of classified campaign documents related to Emmanuel Macron were posted online. Twitterbots kept posting about the leak and helped keep the topic trending for hours before the election. It seems to have had little effect on the outcome, though, as Macron won comfortably (which we predicted correctly using AI).

- Suppose a brand hires a marketing agency for a publicity campaign. To judge the efficacy of the campaign, it is important to understand whether its virality was due to a push from spammers/bots. If it was, the campaign might have a negative effect on the brand, and the brand is left with the illusion of an increased follower count. These bots are not real customers. Thus, it is a loss on both ends for the brand.

These are a few notable places where bots have influenced the views of the audience. Though originally meant for a better role in social media, bots on Twitter are now mostly treated as spam. Social media platforms are constantly being optimized to fight this menace. Like any other technology, bots can help you in many ways if used ethically: in customer support, marketing, and general business development. Interesting times are ahead as the era of machine intelligence approaches. It is up to intelligent AI algorithms to help us phase out spam, bots and fake content from social media platforms.

The above study was carried out by Karna AI, the Market Research division of ParallelDots Inc.

ParallelDots AI APIs are a Deep Learning powered web service by ParallelDots Inc. that can comprehend huge amounts of unstructured text and visual content to empower your products. You can check out some of our text analysis APIs and reach out to us by filling out this form or writing to us at apis@paralleldots.com.